LNG’s Tax Break Controversy

August 5, 2025

A story is breaking that Cheniere Energy is applying retroactively for an alternative fuel tax credit for using “boil off” natural gas to propel their liquefied natural gas (LNG) tankers from 2018 to 2024, the year the tax break expired. The story leans towards discrediting the merits of the application. Before we get into that, first some basics.

LNG is natural gas in the liquid state. In this state it occupies about 1/600th of the volume of the same gas at atmospheric conditions, making it far more amenable to ocean transport. It reaches this state by being cooled to −162 °C. Importantly, it is kept cold not by conventional refrigeration, but by the latent heat of vaporization of small quantities of the liquid that evaporate. If the resultant gas is released to the atmosphere, it is a greenhouse gas 80 times more potent than CO2 over 20 years. In recent years, tanker engines have been repurposed to burn natural gas. The “boil off” gas, as it is referred to, is captured, stored and used for motive power. As with most involuntary methane release situations, capture has the dual value of economic use and environmental benefit. In the case of LNG tankers, boil off gas can cover most of the propulsion needs on both legs of the voyage. Short haul LNG trucks also generate boil off gas, but it is unlikely that the expense of recovery and dual fuel engines is incurred for them.

Also, by way of background, Cheniere Energy is a pioneer in LNG. It began by building import terminals around 2008. Shortly after that, US shale gas hit its stride and LNG imports evaporated. US shale gas then became a viable source of LNG exports, and Cheniere again took the lead, converting import terminals to export capability. Today they are the leading US exporter.

Now to the merits of considering boil off natural gas as an alternative fuel. The original intent of the law, which expired in 2024, appears to have been to encourage replacing fuels such as diesel with a cleaner burning alternative. However, the letter of the law limits this to surface vehicles and motorboats. LNG vessels have been powered by steam turbines (fired by fuel oil or natural gas), and more lately by dual fuel engines using boil off gas and a liquid fuel ranging from fuel oil to diesel. Using a higher proportion of boil off gas certainly is environmentally favorable, mostly because sulfur compounds will be essentially absent and particulate matter will be vastly lower than with diesel or fuel oil. If this gas were not used for power, it would hypothetically be flared, leading to CO2 emissions and possibly some unburnt hydrocarbons. Capture and reuse also provide an economic benefit. So, should boil off gas qualify as an alternative fuel?
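The environmental case can be made concrete with a back-of-the-envelope comparison of venting versus flaring one tonne of boil off gas. This is a minimal sketch under stated assumptions: the gas is treated as pure methane, the 20-year potency factor of 80 quoted earlier is used, and the flare is assumed to burn completely (CH4 + 2 O2 → CO2 + 2 H2O), so each 16 g of methane yields 44 g of CO2. The function name is illustrative; real boil off gas contains some nitrogen and heavier hydrocarbons, and real flares are not perfectly efficient.

```python
# Back-of-the-envelope CO2-equivalent of venting versus flaring methane.
# Assumptions (illustrative): pure methane, a 20-year GWP of 80 as quoted in the text,
# and complete combustion in the flare: CH4 + 2 O2 -> CO2 + 2 H2O.

GWP20_CH4 = 80.0  # potency of methane relative to CO2 over 20 years
M_CH4 = 16.04     # molar mass of methane, g/mol
M_CO2 = 44.01     # molar mass of CO2, g/mol


def co2e_tonnes(methane_tonnes: float, flared: bool) -> float:
    """Tonnes of CO2-equivalent from a given mass of methane.

    Vented: the methane itself counts, weighted by its 20-year GWP.
    Flared: each mole of CH4 becomes one mole of CO2, so mass scales by M_CO2 / M_CH4.
    """
    if flared:
        return methane_tonnes * M_CO2 / M_CH4
    return methane_tonnes * GWP20_CH4


vented = co2e_tonnes(1.0, flared=False)
burned = co2e_tonnes(1.0, flared=True)
print(f"vented: {vented:.1f} t CO2e, flared: {burned:.2f} t CO2e, ratio: {vented / burned:.0f}x")
```

On these assumptions, venting is roughly 29 times worse than flaring over 20 years. Burning the gas in an engine emits about the same CO2 per tonne as flaring, but it also displaces liquid fuel that would otherwise be burned.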

An analog in oil and gas operations could be instructive. Shale oil is light oil, and light oil tends to carry associated natural gas because of the mechanism by which these molecules form. Heavy oil, by contrast, can be expected to have virtually no associated gas. When oil wells are in relatively small pockets or remote locations, the small volumes or the remoteness make export pipelines uneconomic, and the gas is flared on location. Worldwide, about 150 billion cubic meters was flared in 2024, the highest level in nearly two decades. Companies such as M2X Energy capture this gas and convert it to useful fuel such as methanol; in the case of M2X, the process equipment is mobile. The methanol thus produced could be considered green because the CO2 and unburnt alkane emissions from flaring would be eliminated.

If that is so, boil off gas has some claim to consideration as an alternative fuel. However, the key difference in the analogy is that, in the case of the LNG vessel, an economic ready use exists. Not so for the remotely located flared gas. But is an economic ready use a bar to consideration? Take the example of CNG or LNG replacing diesel in trucks. They likely qualify for the credit. The tax break approval may well come down to splitting hairs over the definition of motorboat. An LNG vessel is certainly a boat, and has a motor, but does not neatly classify as a motorboat in common parlance*. But if the sense of the law is met, ought the letter of the law to prevail?

Vikram Rao

*That which we call a rose, by any other name would smell as sweet, Juliet in Romeo and Juliet, Act II, Scene II (1597), written by W. Shakespeare.

The Frac’ing Dividend

June 29, 2025

Few will dispute the fact that the US was a net importer of natural gas in 2007. Cheniere Energy was gearing up to import liquefied natural gas (LNG) and deliver it to the country. Then natural gas from shale deposits became economic and scalable. How Cheniere reinvented their business model by pivoting to convert their re-gas terminals to become LNG producers is a story for another time. As is the tale of shale gas singlehandedly lifting the US out of recession. Low-cost energy is a tide that lifts all boats of economic prosperity, and this one sure did.

The story for today is that the technologies that enabled shale gas production are showing up as key enablers for geothermal energy, the leading carbon-free energy source that can operate 24/7/365. Solar and wind, by contrast, are intermittent, with capacity factors (roughly, the energy actually delivered as a fraction of what continuous full-power operation would deliver) of up to 25% and 40%, respectively. The short duration intermittency (frequently defined as under 10 hours) is covered by batteries. But for longer periods, the most promising gap fillers are geothermal energy, small modular (nuclear) reactors and innovative storage means. Of these, the furthest along are geothermal, as represented by advanced geothermal systems (AGS), some battery systems, and hydrogen from electrolysis of water using power when not needed by the grid.
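To make the capacity factor figures concrete, the minimal sketch below converts the upper-end values quoted above into annual energy from 1 MW of nameplate capacity. The 1 MW size is an illustrative assumption, and the "shortfall" is simply the energy other sources (batteries or firm generation) would have to supply to turn that 1 MW into round-the-clock output, ignoring storage losses and the timing of output.

```python
# Annual energy from 1 MW of nameplate capacity at a given capacity factor.
# Capacity factor = energy actually delivered / energy at full rated power all year.

HOURS_PER_YEAR = 8760  # 365 days x 24 h (ignores leap years)


def annual_energy_mwh(nameplate_mw: float, capacity_factor: float) -> float:
    """Energy delivered in a year, in MWh."""
    if not 0.0 <= capacity_factor <= 1.0:
        raise ValueError("capacity factor must be between 0 and 1")
    return nameplate_mw * HOURS_PER_YEAR * capacity_factor


for source, cf in [("solar", 0.25), ("wind", 0.40)]:
    delivered = annual_energy_mwh(1.0, cf)
    shortfall = annual_energy_mwh(1.0, 1.0) - delivered  # needed for 1 MW around the clock
    print(f"{source}: {delivered:,.0f} MWh delivered, {shortfall:,.0f} MWh shortfall")
```

Even at the upper end, 1 MW of solar leaves about three quarters of a round-the-clock megawatt-year to be filled by something else, which is the gap the paragraph above describes.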

The most commercially advanced AGS variant is that of Fervo Energy. It employs two parallel horizontal wells intended to be in fluid communication. Hydraulic pressure is used to create and propagate fractures from the injector well towards the induced fractures at the producer well. Fluid is pumped into the injector well and flows through the fracture network into the producer well, heating up as it traverses hot rock. This hot fluid is used to generate electricity. It may also be used for district or industrial heating. The heat in the rock is replenished by heat flowing outward from the earth’s interior, which originates partly from the decay of radioactive elements and partly from heat left over from the planet’s formation. The center of the earth is at temperatures close to that of the surface of the sun.

The two key enabling technologies for the process described above are horizontal drilling (including drilling a pair of wells reasonably parallel to each other) and hydraulic fracturing, known in industrial parlance as “frac’ing”. Clever modeling (from a co-founder’s PhD thesis at Stanford) dictates many operational parameters such as optimal well separation, but the guts of the operation are those employed in shale oil and gas production. With one difference: the temperatures are greater than in most conventional shale operations. This affects the drilling portion more than the fracturing one. Temperatures will be greater than 180 °C in AGS operations, and in excess of 350 °C in the so-called closed loop systems (which involve no fracturing, except for one minor variant). Even in AGS systems, higher temperatures are preferred, and Fervo recently demonstrated operations at 250 °C. While this stresses industry capability, it still falls firmly within oil and gas competency, and the geothermal industry can rely on it rather than attempt to invent in that space.

The frac’ing dividend mentioned in the title of this piece refers to advances in frac’ing operations which accrue directly to the benefit of AGS operations. A key one is the ability to use “slick water”, which is fracturing fluid with little to no chemical additives. Opponents of shale gas operations have often cited the possibility of surface release of these chemicals as a concern. Similarly, accidental surface release of hydrocarbons in the fluid returning from the well has been a concern, but it is irrelevant here because the rock being drilled contains no hydrocarbons. AGS operations do carry the possibility of induced seismicity. This is where the pressure from fracturing energizes an active fault, if one is present nearby, and the resulting slip (movement between rock segments) causes a sound wave in the seismic range. However, the risk is small, especially if the activity is in a naturally fractured zone, where fractures can be propagated at lower induced pressures than in unfractured rock. In any event, competent operators such as Fervo are placing observation wells, and to date induced seismicity has not been a concern. The sound heard is that of (relative) silence*.

Finally, the shale oil and gas industry devised economies of scale by placing multiple wells on each “pad”. These techniques, including elements such as the rigs moving swiftly on rails, are directly applicable to AGS systems using frac’ing. So, any reported economics of single well pairs could, in my opinion, be improved by up to 40% when tens of well pairs are executed on a single pad.

This is the big frac’ing dividend.

Vikram Rao

June 29, 2025

*And no one dared disturb the sound of silence, from The Sound of Silence, performed by Simon and Garfunkel, 1965, written by Paul Simon.

Wildfires: Pain Now, Even More Later

March 16, 2025

A March 14 story in the New York Times describes research efforts to quantify health outcomes from the Altadena fire. Once you get past the “toxic soup” sensationalism in the title, the content is good in identifying the long-term killer: ultrafine particles, which can enter cells and cross the blood brain barrier, causing ailments ranging from cancer to dementia. The near-term privation wrought by fires, especially ones such as this, close to urban areas, is overt. The long-term effects tend to get discounted, much as long-term profits are in business. But they are real, and worse for fires near populated areas. Here’s why.

Forest fires usually originate and burn in remote areas. Direct impact is usually on small communities. They are evacuated and the burn is contained as well as possible. However, when they do occur close to major metropolitan areas, a somewhat different set of rules appears to apply. The priority is the protection of people and property. While this may appear to be a truism, it can lead to unintended consequences. Fierce burns are subdued to smolders and attention is shifted to the next fierce burn. This certainly achieves the priority purpose and may be the only practical way to achieve it, given limited resources.

However, in terms of effects on human health, all fires are not created equal. A fierce fire is one where enough oxygen is available for a complete burn of the flammable material. This principle applies to the burner on your stove as well. A complete burn is desirable on your stove to give maximal heat and to minimize unburnt hydrocarbons (you should shoot for a blue flame, not yellow). Same for forest fires. Parts of the tree, especially the bark, contain aromatic (organic) molecules. An incomplete burn causes these organic molecules to be released into the air. This matters because these molecules are directly injurious to human health. There is another, somewhat more insidious, negative. These molecules coat the carbon particles (soot) emitted, making them much more toxic.

Forest Fire, painting, courtesy Falguni Gokhale

The importance of being small (with apologies to Oscar Wilde). Soot particles are also more toxic when they are small. Particles below about 100 nanometers (nm) are classified as nanoparticles; in public health circles they are known as ultrafine particles. Whereas larger particles impair respiratory pathways, ultrafine particles go a step further. They can enter cells and are transported throughout the body. They are known to cross the blood brain barrier and are strongly implicated in exacerbating dementia.

As if this were not bad enough, ultrafine particles are particularly prone to deposition of organic molecules on their surface. This is because they have a large surface area for their mass, and that ratio is known to make them more reactive, a property that is used beneficially in chemical engineering. Here, it is all bad. Toxic organic molecules are now attached to particles which readily enter cells. Game, set and heading to match.

Now to another negative of a smoldering burn as opposed to a fierce one. A smolder produces a relatively high proportion of ultrafine particles compared with a complete burn. A study of the 2019 Getty Fire demonstrated this effect. This too was a fire near dense housing, a couple of miles from UCLA and very close to the Getty Museum. The study found that the median particle diameter was 130 nm in the flaming state and dropped to 40 nm in the smoldering state. Potentially confounding variables were ruled out in arriving at this conclusion.
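The surface area argument can be quantified with a simple geometric idealization. For a solid sphere of diameter d and density ρ, surface area per unit mass is 6/(ρd), so it scales inversely with diameter. The minimal sketch below applies this to the two median diameters reported for the Getty Fire. Treating soot as solid spheres is a simplifying assumption (real soot is often made of irregular aggregates), and the 1.8 g/cm³ density is an assumed illustrative value; the ratio between the two sizes does not depend on it.

```python
# Specific surface area (surface area per unit mass) of an idealized solid sphere.
# Assumptions: particles are solid spheres; density 1.8 g/cm^3 (illustrative value for soot).
import math

DENSITY_KG_M3 = 1800.0  # assumed particle density


def specific_surface_area_m2_per_g(diameter_nm: float, density_kg_m3: float = DENSITY_KG_M3) -> float:
    """Surface area per gram of a sphere: (pi d^2) / (rho * pi d^3 / 6) = 6 / (rho d)."""
    d = diameter_nm * 1e-9                                   # diameter in metres
    area_m2 = math.pi * d ** 2                               # sphere surface area
    mass_g = density_kg_m3 * math.pi * d ** 3 / 6 * 1000.0   # sphere mass, kg -> g
    return area_m2 / mass_g


flaming = specific_surface_area_m2_per_g(130)    # median diameter, flaming phase
smoldering = specific_surface_area_m2_per_g(40)  # median diameter, smoldering phase
print(f"flaming (130 nm): {flaming:.1f} m^2/g")
print(f"smoldering (40 nm): {smoldering:.1f} m^2/g")
print(f"ratio: {smoldering / flaming:.2f}")
```

On this idealization, each gram of smoldering-phase soot offers about 3.25 times (130/40) the surface on which toxic organic molecules can deposit.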

The inescapable inference is that smoldering fires are a greater health risk than flaming ones because they create ultrafine particles and emit volatile organic compounds, which preferentially attach to the ultrafine particles. Admittedly, the priority on dousing the flames is well placed, but more attention ought to be paid to putting out the smolders.

The Getty Fire, and several other large ones in California, were started by tree limbs falling on power lines, sparking them and igniting the underbrush. This brings into focus the fact that high voltage power lines are bare, devoid of insulation. No practical solutions exist to insulate the lines. Underground lines are found in communities but are not feasible across forests. Chalk one up for distributed power.

Toxicity of fire emissions is also a function of the type of wood. Lung toxicity studies demonstrated that eucalyptus was by far the worst, followed by red oak and pine. This is bad news for Australia, where the species is native. But California has it in abundance because it was imported as a construction wood for its fast growth and drought tolerance. The use in construction did not pan out (the wrong variety was brought in), but eucalyptus loved the West Coast setting and is now considered an invasive species and is highly prevalent.

One final point regarding ultrafine particulate emissions from wildfires. These can, and do, travel across the continent. The smaller the particles, the farther they will travel. Ergo, the most toxic particles affect much of the nation, not just the areas close to the fires.

And the pain in the form of disease will be felt long after the fires are a distant memory*.

Vikram Rao

March 16, 2025

*All my troubles seemed so far away, now it looks as though they’re here to stay, from Yesterday, performed by The Beatles (1965), written by John Lennon and Paul McCartney.

Are Cows Getting a Free Pass on Methane Emissions?

January 20, 2025

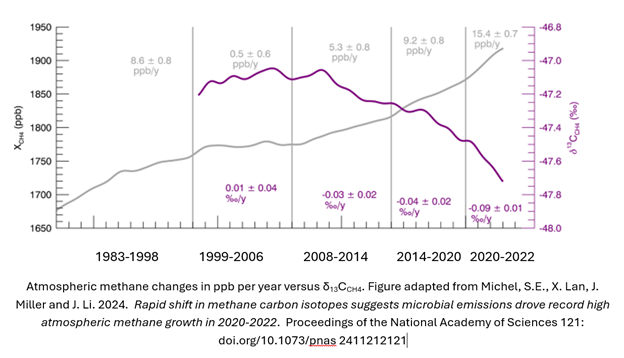

A recent study reported in the Proceedings of the National Academy of Sciences¹ convincingly shows that the increase in atmospheric methane over the last 15 years can be attributed primarily to microbial sources. These comprise ruminants (cows in the main), landfills and wetlands. Yet, policy action on curbing methane has largely focused on leakage in natural gas infrastructure. In the US as well as in Canada, policies have fallen short of comprehensive action in the agricultural sector²,³.

Before we discuss the study, first some (hopefully not too nerdy) basics. Methane has the chemical formula CH4. In the common variety, designated ¹²CH4, the carbon nucleus has 6 protons and 6 neutrons. The isotopic variant ¹³CH4 has one additional neutron in the carbon nucleus. The ratio of ¹³CH4 to ¹²CH4 is used to detect the origin of the methane. The measured ratio is compared to that of a marine carbonate, an established standard. The comparison is expressed as δ¹³C-CH4, in units of per mil (‰), and is always a negative number because methane from all known sources has a lower ratio than the standard carbonate. Microbially sourced methane will have values of approximately -90 ‰ to -55 ‰, and methane from natural gas will be in the range -55 ‰ to -35 ‰. This is the measure used to deduce the source of methane in the atmosphere.
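The delta notation is a simple relative difference: δ¹³C = (R_sample / R_standard − 1) × 1000, where R is the ¹³C/¹²C ratio. The minimal sketch below computes it and maps a value onto the ranges quoted above. The standard ratio used here (0.0111802) is one commonly quoted value for the marine carbonate reference and is an assumption; other values appear in the literature. The function names are illustrative, and the tie-break at -55 ‰, where the quoted ranges meet, is arbitrary.

```python
# Delta notation for carbon isotopes and a crude source classifier.
# Assumptions: R_STANDARD is one commonly quoted 13C/12C ratio for the marine-carbonate
# reference; the classification ranges are those quoted in the text.

R_STANDARD = 0.0111802  # assumed 13C/12C ratio of the reference carbonate


def delta13c_permil(r_sample: float, r_standard: float = R_STANDARD) -> float:
    """delta13C in per mil: (R_sample / R_standard - 1) * 1000, with R = 13C/12C."""
    return (r_sample / r_standard - 1.0) * 1000.0


def likely_source(delta: float) -> str:
    """Map a delta13C-CH4 value onto the ranges quoted in the text.

    The quoted ranges meet at -55 per mil; -55 is assigned to natural gas here,
    an arbitrary tie-break.
    """
    if -90.0 <= delta < -55.0:
        return "microbial"
    if -55.0 <= delta <= -35.0:
        return "natural gas"
    return "outside quoted ranges"


# A hypothetical sample whose 13C/12C ratio is 6% below the standard:
d = delta13c_permil(R_STANDARD * 0.94)
print(f"{d:.1f} per mil -> {likely_source(d)}")
```

Atmospheric methane is a mixture of all sources, so the study does not classify a single value this way; it infers source shares from how the mixed signal shifts over time, using a model.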

Here is the lightly adapted main figure from the cited study. The data are primarily from the National Oceanic and Atmospheric Administration’s Global Monitoring Laboratory, but as shown in the paper, similar results have been observed from other international sources. The gray line is atmospheric methane, shown increasing steadily over decades, but with steeper slopes in recent years. The steeper portion roughly coincides with the period in which the isotopic ratio becomes increasingly negative. This implies a growing contribution from isotopically lighter (more negative) sources, which in turn means that the main contributor is microbial. Note also the sharper isotopic shift in 2020-2022 and, coincidentally or not, the steeper rise in atmospheric methane in those years. The paper’s authors do not see the correlation as coincidental. They emphatically state: our model still suggests the post-2020 CH4 growth is almost entirely driven by increased microbial emissions.

A quick segue into why methane matters. The global warming potential of methane is 84 times that of CO2 when measured over 20 years, and 28 times when measured over 100 years. Climatologists generally prefer to use the 100-year figure (and I used to as well), but urgency of action dictates that the 20-year figure be used. The reason for the difference is that methane breaks down gradually to CO2 and water, so it is more potent in the early years.
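The choice of time horizon matters a great deal in practice. The minimal sketch below values the same methane release at the two global warming potentials quoted above; the 1,000 tonne release is an illustrative assumption, and the function name is hypothetical.

```python
# CO2-equivalent of a methane release under the two time horizons quoted in the text.
GWP_CH4 = {20: 84, 100: 28}  # potency relative to CO2, keyed by horizon in years


def co2e(methane_tonnes: float, horizon_years: int) -> float:
    """Tonnes of CO2-equivalent for a methane release, for a supported horizon."""
    try:
        return methane_tonnes * GWP_CH4[horizon_years]
    except KeyError:
        raise ValueError(f"no GWP value for a {horizon_years}-year horizon") from None


release = 1_000.0  # tonnes of methane (illustrative)
for h in (20, 100):
    print(f"{h}-year horizon: {co2e(release, h):,.0f} t CO2e")
```

The same release counts three times as heavily on the 20-year basis, which is why the choice of horizon shapes how urgent methane abatement appears.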

These research findings point to the need for policy to urgently address microbial methane production. This does not mean that we let up on preventing natural gas leakage, the means for which are well understood. The costs are also well known and, in many cases, simply better practice achieves the result. In fact, the current shift toward microbial methane as a relatively larger component could well reflect actions already being taken to limit the other source. But it does mean that federal actions must target microbial sources more overtly than in the past. We will touch on a few of these areas and what may be done.

Landfill gas can be captured and treated. In the US, natural gas prices may be too low to profitably clean landfill methane sufficiently to be put on a pipeline. Part of the problem is that, due to impurities such as CO2, landfill gas has relatively low calorific value, almost always well short of the pipeline standard of 1 million BTU per thousand cubic feet. However, technologies such as that of M2X can “reform” this gas to synthesis gas, and thence to methanol, and a small amount of CO2 is even tolerated (Disclosure: I advise M2X).
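The calorific shortfall follows from simple blending: CO2 contributes no heating value, so on a per-cubic-foot basis the heating value of the gas scales with its methane fraction. The minimal sketch below assumes a higher heating value of about 1,010 BTU per standard cubic foot for pure methane, treats everything else as inert, and uses 50% methane as an illustrative raw landfill gas composition (real compositions vary). The 1,000 BTU/scf threshold restates the pipeline standard quoted above; actual pipeline specifications also limit CO2, oxygen and other impurities separately.

```python
# Heating value of a methane/inert-gas blend versus a pipeline threshold.
# Assumptions: pure methane HHV ~1,010 BTU/scf; CO2 and other components are inert;
# 1,000 BTU/scf stands in for the pipeline standard (1 million BTU per thousand cubic feet).

HHV_METHANE_BTU_PER_SCF = 1010.0
PIPELINE_MIN_BTU_PER_SCF = 1000.0


def blend_hhv(methane_fraction: float) -> float:
    """Heating value (BTU/scf) of a gas with the given methane volume fraction."""
    return methane_fraction * HHV_METHANE_BTU_PER_SCF


def min_methane_fraction(threshold: float = PIPELINE_MIN_BTU_PER_SCF) -> float:
    """Methane volume fraction needed to reach the threshold, with no other fuel present."""
    return threshold / HHV_METHANE_BTU_PER_SCF


raw = blend_hhv(0.50)  # illustrative raw landfill gas, 50% methane
print(f"raw landfill gas: {raw:.0f} BTU/scf")
print(f"methane needed for pipeline: {min_methane_fraction():.1%}")
```

On these assumptions, raw gas at about 505 BTU/scf must be upgraded to roughly 99% methane, which is why cleanup is costly and why a route that tolerates some CO2 is attractive.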

Methane from ruminants (animals with four-compartment stomachs tailored to digest grassy materials) is a more difficult problem. Capture would be operationally difficult. The approach being followed by some is to add an ingredient to the feed to minimize methane production. Hoofprint Biome, a spinout from North Carolina State University, introduces a yeast probiotic to carry enzymes into the rumen to modify the microbial breakdown of the cellulose with minimal methane production. I would expect this more efficient animal to be healthier and more productive (milk or meat). Nailing down these economic benefits could be key to scaling, especially for dairies, which are challenged to be profitable. Net-zero dairies could be in our future.

Early-stage technologies already exist to capture methane from the manure of farm animals such as pigs. These too could take approaches similar to those proposed for landfill gas, although the chemistry would be somewhat different. Several startups are targeting hydrogen production by pyrolysis of methane into hydrogen and carbon. The carbon has potentially significant value as carbon black, for applications such as filler in tires, and as biochar, an agricultural supplement. If the methane is from a source such as this, the hydrogen would be considered green in some jurisdictions.

The federal government ought to make it a priority to accelerate scaling of technologies that prevent release of microbial methane into the atmosphere. With early assists, many approaches ought to be profitable. Then it would be a bipartisan play*.

Vikram Rao

*Come together, right now, from Come Together, by The Beatles, 1969, written by Lennon-McCartney

1 Michel, S.E., X. Lan, J. Miller and J. Li. 2024. Rapid shift in methane carbon isotopes suggests microbial emissions drove record high atmospheric methane growth in 2020-2022. Proceedings of the National Academy of Sciences 121: doi.org/10.1073/pnas.2411212121

2 Patricia Fisher https://fordschool.umich.edu/sites/default/files/2022-04/NACP_Fisher_final.pdf

3 Ben Lilliston 2022 https://www.iatp.org/meeting-methane-pledge-us-can-do-more-agriculture

The Bulls Are Running in Natural Gas

January 2, 2025

Ukraine shut down the natural gas pipeline from Russia to southern Europe yesterday. While not unexpected, it is yet another red rag to natural gas bulls. It also means ascendancy for liquefied natural gas (LNG) futures and an associated increase in US influence in Europe because, according to the Energy Information Administration, most new LNG supply in the world will come from the US. Of course, that means more fodder for the debate in the US on whether LNG exports will raise domestic prices by more than ordinary price elasticity of demand would suggest. I see a greater demand impact from a different source, but more on that below.

Natural gas usage will not be in decline anytime soon. In fact, usage will steadily increase for the next couple of decades. Much of the incremental usage will be for electricity production, with a business model twist: expect a trend to captive production “behind the meter”. Not having to deal with utilities will speed introduction. Of course, the entire production will need guaranteed offtake, but that will not be much of a hurdle for some of the deep-pocketed application owners.

So, what has changed? Why are fossil fuels not in decline in preference to carbon-free alternatives? Much of the answer is that all fossil fuels are not created equal. Ironically, oil and gas are created by precisely the same mechanism, but their usage and the associated emissions are horses of different colors. At some risk of oversimplification, oil is mostly about transportation and natural gas is mostly about electricity and space heating.

As a first cut, oil usage will decline when carbon-free transport fuel alternatives take hold. Think electric vehicles, methanol powered boats, biofuels for aviation and so forth. Similarly, natural gas usage reduction relies upon the rate of growth of carbon-free electricity (note my use of carbon-free instead of renewable), which today is almost all solar and wind based. Advanced geothermal is nascent and nuclear is static except for some rumblings among small modular reactors (SMRs).

In the case of oil in transportation, when the switch to battery or hydrogen power arrives, oil will be fully displaced from that vehicle. This discussion sets aside hybrids and lubricating oils. In electricity, however, solar and wind power being intermittent, some other means are needed to fill the gaps. Those means are dominantly natural gas powered today for longer durations (greater than 10 hours). For short durations and diurnal variations, batteries get the job done for around 2 US cents per kWh. Very affordable, and unlikely to change. To underline the point, solar and wind need natural gas for continuous supply. Until alternatives such as long duration storage, geothermal or SMRs make their presence felt, every installation of solar or wind increases natural gas usage.

As if that were not bad enough, a recent complication is increasing electricity demand. Artificial Intelligence, or AI, and to a greater extent the Generative AI variant, has increased electricity demand dramatically. For example, a search query uses 10 times the energy when employing Gen AI, compared to a similar conventional search. The information is presumably more useful, but the fact remains that these searches and other applications, such as in language, are power hogs. The data center folks are trolling for power in geothermal and nuclear. Microsoft went so far as to commission the de-mothballing of the 3 Mile Island conventional nuclear facility. The control room picture shows its age. The operators will face what an F-18 pilot would if asked to fly an F-14 (apologies, just saw the film Top Gun: Maverick).

Almost all the big cloud folks want to use carbon-free power, 24/7/365. Good luck getting that from a utility. Some, such as Google with Fervo Energy geothermal, are enabling supply to the grid and capturing the credit. Others are going “behind the meter”, meaning captive supply not intended for the grid. The menu is geothermal, SMRs and innovative storage systems. All have extended times to get to scale. Is there an option that is more scalable sooner to suit the growth pattern of AI?

Natural gas. A combined cycle plant (electricity both from a gas turbine and from a steam turbine later in the cycle) could be constructed in less than 18 months. Carbon capture is feasible, even though the lower CO2 concentrations of 3 to 5% (as compared to 12 to 15% for coal plants) make it costlier (a reason I am bearish on direct air capture, with 0.04% concentration). At the current state of technology, I estimate that capture will add 3 to 4 cents per kWh. This technology will keep improving, but that number is already worth the price of admission, at least in the US, where the base natural gas price is low. Not renewable, but nor is nuclear. You see why I prefer the carbon-free language?
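Why concentration drives cost can be seen from thermodynamics. For an ideal gas mixture, the minimum (reversible) work to withdraw a small amount of CO2 at mole fraction x and deliver it as pure CO2 at the same temperature and pressure is RT ln(1/x) per mole. The minimal sketch below evaluates this idealized lower bound at 25 °C for mid-range gas and coal flue gas concentrations and for air. It is an illustration, not a cost model: real capture processes need considerably more energy, and capturing a large fraction of the CO2 raises the bound because the stream gets leaner as CO2 is removed.

```python
# Minimum (reversible) work to pull CO2 out of an ideal gas mixture.
# Idealization: W_min = R * T * ln(1 / x) per mole of CO2, for a small amount removed
# from a stream at CO2 mole fraction x, delivered as pure CO2 at the same T and P.
import math

R = 8.314      # gas constant, J/(mol K)
T = 298.15     # 25 degrees C, in kelvin
M_CO2 = 44.01  # molar mass of CO2, g/mol


def min_work_kj_per_tonne(co2_fraction: float) -> float:
    """Lower-bound separation work in kJ per tonne of CO2."""
    if not 0.0 < co2_fraction < 1.0:
        raise ValueError("mole fraction must be between 0 and 1")
    j_per_mol = R * T * math.log(1.0 / co2_fraction)
    mol_per_tonne = 1e6 / M_CO2  # grams per tonne / grams per mole
    return j_per_mol * mol_per_tonne / 1000.0


cases = {"gas plant (4%)": 0.04, "coal plant (13.5%)": 0.135, "air (0.04%)": 0.0004}
for name, x in cases.items():
    print(f"{name}: {min_work_kj_per_tonne(x) / 1000:.0f} MJ per tonne CO2")
```

On this bound, gas plant flue gas needs about 1.6 times the work per tonne of coal flue gas (roughly 180 versus 110 MJ), and air needs more than twice as much again (roughly 440 MJ). The practical gap is larger still, because far more gas must be handled per tonne captured at low concentration.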

To be behind the meter, the plant would need to be near the data center. Data centers prefer cool-climate sites because rejecting heat to cooler ambient air reduces power usage. Since natural gas pipelines serve a wide area, this ought not to be a major constraint. However, it could favor producers in northern latitudes, especially if a rich deposit is currently unconnected to a major pipeline. Favorable deals could be struck, especially with long-term offtake contracts, in part because the gas operator would cut out the midstream operator’s markup. These conditions could be met in Wyoming, Alberta (Canada) and, of course, Alaska.

We used to refer to natural gas as a bridge to renewables, until that phraseology fell out of vogue. The thinking was that gas could replace coal to provide some CO2 emissions relief (and it did that for the US), and eventually be replaced by renewable energy. The model suggested above does not fit that definition. Those plants will likely not be replaced because they would be essentially carbon neutral. And they would enable a powerful new technology the foundations of which already have been awarded the 2024 Nobel Prize in Physics. Gen AI may well lose some of its luster, but the machine learning underpinnings will survive and continue to deliver. All that will need data crunching. More data centers are firmly in our future.

Those still inclined to gnash their teeth over emissions from natural gas production ought to ponder nuclear spent fuel disposal, mining of silica for solar panels and the problem of disposing of disused wind turbine blades, to name just a few. Every form of energy has warts*. We simply need to minimize them.

Vikram Rao

January 2, 2025

*Every rose has its thorn, from Every Rose Has Its Thorn, 1988, performed by Poison, written by Bret Michaels et al.

Advanced SMRs: No Fuss, (Almost) No Muss

December 27, 2024

The potentially catastrophic condition that a nuclear reactor can encounter is overheating leading to meltdown of the core. Conventional reactors need active human or automatic control intervention. These can go wrong, as they did in the 3 Mile Island accident. Small modular (nuclear) reactors (SMRs) are designed to share the trait of cooling down passively (automatically, without intervention) in the event of an upset condition. SMR designs to achieve this control differ, but all fall in the class of intrinsically safe, to use terminology from another discipline. This is the no fuss part.

The muss, which is harder to deal with, entails the acquisition and use of fissile nuclei (nuclei which can sustain a fission reaction), and then the disposition of the spent fuel. Civilian reactors use natural uranium enriched in fissile U-235 to up to 20%. At concentrations greater than that, theoretically a bomb could be constructed. The most common variant, the pressurized water reactor (PWR), uses 3 to 6% enrichment. Sourcing enriched uranium is another issue. Currently, Russia supplies over 35% of this commodity to the world. The US invented the technology but imports most of its requirement.
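The cost of enrichment shows up in how much natural uranium is needed per kilogram of enriched product. A standard mass balance on total uranium and on U-235 gives feed per unit product F/P = (x_p − x_t)/(x_f − x_t), where x_f, x_p and x_t are the U-235 fractions of the feed, product and depleted tails. The minimal sketch below assumes natural uranium at 0.711% U-235 and a tails assay of 0.25%, a typical but assumed value. It ignores separative work, which is the main cost driver, and the function name is illustrative.

```python
# U-235 mass balance for enrichment: natural uranium feed needed per kg of product.
# Assumptions: feed is natural uranium at 0.711% U-235; a tails assay of 0.25% is illustrative.

X_FEED = 0.00711   # U-235 fraction in natural uranium
X_TAILS = 0.0025   # assumed U-235 fraction left in depleted tails


def feed_per_kg_product(x_product: float, x_feed: float = X_FEED, x_tails: float = X_TAILS) -> float:
    """kg of natural uranium feed per kg of enriched product.

    From F = P + T (total uranium) and F*x_f = P*x_p + T*x_t (U-235 alone).
    """
    if not x_tails < x_feed < x_product:
        raise ValueError("need tails < feed < product assay")
    return (x_product - x_tails) / (x_feed - x_tails)


for label, xp in [("PWR, 5%", 0.05), ("19%, near the 20% limit", 0.19)]:
    f = feed_per_kg_product(xp)
    print(f"{label}: {f:.1f} kg feed per kg product, {f - 1:.1f} kg depleted tails")
```

On these assumptions, about 10 kg of natural uranium goes into each kilogram of 5% fuel and about 41 kg into each kilogram of 19% fuel, with the balance left as depleted uranium.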

In all PWRs and most other reactors, nearly 90% of the energy is still left unused in the spent fuel (fuel in which the active element is reduced to impractical concentrations), mostly in the remaining uranium and in plutonium formed during operation. Recycling could recover this value, but France is the principal country doing so. The US prohibited commercial recycling until a few decades ago, for fear that the separated plutonium could fall into the wrong hands. Geological storage is considered the preferred method but runs into local opposition at proposed sites, although an underground site in Finland is ready and open for business.

One class of reactors that defers the disposal problem, potentially for decades, is the breeder reactor. The concept is to convert a fertile (non-fissile) nucleus, such as U-238, the dominant isotope of natural uranium, or relatively abundant thorium (Th-232), to fissile Pu-239 or U-233, respectively. The principal allure, beyond the low frequency of disposal, is that essentially all the mineral is utilized without expensive enrichment. In both cases, the fuel being transported is more benign, in not being fissile. One variant uses spent fuel as the raw material for fission. The reactor is the recycling means.

At a recent CERAWeek event, Bill Gates drew attention to TerraPower, an SMR company that he founded. For the Natrium (Latin for sodium) offering, which combines the original TerraPower Traveling Wave Reactor (TWR) technology with that of GE Hitachi, the coolant is liquid sodium (they are working on another concept which will not be discussed here). Using molten metal as a coolant may appear strange, but the technical advantages are high thermal conductivity and a high boiling point, which allows operation near atmospheric pressure. The efficacy of this means was proven as long ago as 1986, when, in the sodium-cooled Experimental Breeder Reactor-II at Idaho National Laboratory, all pumps were shut down, as was the power. The reactor shut itself down within minutes, with natural convection in the molten metal carrying away the heat. That reactor operated for 30 years. So, that aspect of the technology is well proven. TerraPower’s 345 MWe Natrium reactor, which broke ground in Wyoming earlier in 2024, is not technically a breeder reactor, although it utilizes fast neutrons, which is helped by the coolant being sodium, which slows neutrons down less than does water (the coolant in PWRs). Natrium uses uranium enriched to up to 19% as fuel.

Natrium has two additional distinguishing features. The thermal storage medium is a nitrate molten salt, another proven technology in applications such as solar thermal power, where it provides power when the sun is not shining. For an SMR, the utility would be in pairing with intermittent renewables to fill the gaps. Their business model appears to be to deliver firm power at a rated capacity of 345 MWe and use the storage feature to deliver as much as 500 MWe for over 5 hours. In general, the unit could be load following, meaning that its output tracks demand at any given time.
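The storage figures quoted above imply a minimum size for the molten salt store. If the reactor keeps supplying heat equivalent to the 345 MWe rating while the plant boosts to 500 MWe, the store must make up the difference. The minimal sketch below works that out using only the numbers in the text; it treats "over 5 hours" as exactly 5 and expresses storage as electrical output, so it is a lower bound rather than a design figure.

```python
# Extra electrical energy the thermal store must supply during a boost.
# Uses the figures quoted in the text; treating "over 5 hours" as exactly 5 h
# makes this a lower bound.

RATED_MWE = 345.0
BOOST_MWE = 500.0
BOOST_HOURS = 5.0


def boost_energy_mwh(rated_mw: float, boost_mw: float, hours: float) -> float:
    """Electrical energy above the rated output delivered during the boost window."""
    if boost_mw < rated_mw:
        raise ValueError("boost output must be at least the rated output")
    return (boost_mw - rated_mw) * hours


extra = boost_energy_mwh(RATED_MWE, BOOST_MWE, BOOST_HOURS)
print(f"extra output: {BOOST_MWE - RATED_MWE:.0f} MWe for {BOOST_HOURS:.0f} h = {extra:.0f} MWh(e)")
```

That is at least 775 MWh of electricity from storage; the stored heat would have to be larger still, because only part of any heat becomes electricity.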

The most distinctive feature of the Natrium design is that the nuclear portion and everything else, including power generation, are physically separated on different “islands”. This is feasible in part because heat from the molten sodium is transferred through a heat exchanger, without contact, to the molten salt, which is therefore radiation free when pumped to the power generation island. The separation of nuclear and non-nuclear construction ought to reduce erection (and demobilization) time and cost. Of course, sodium-cooled reactors are inherently less costly because they operate at near-atmospheric pressure, so the reactor walls can be thinner than they would be for an equivalent PWR.

The separation of the power production from the reactor ought also to lend itself to the reactor being placed underground and less susceptible to mischief. This is especially feasible because fuel replacement ought not be required for decades. This last is the (almost) no muss feature. Disclaimer: to my knowledge, TerraPower has not indicated they will use the underground installation feature.

The “almost” qualifier in “no muss” is partly because, while the fuel is benign for transport, the neutrons for reacting the U-238 are most easily created using some U-235. Think pilot light for a burner. Natrium uses uranium enriched to 16-19% U-235. However, as expected for a fast reactor, more of the charge is burnt, and Natrium reportedly produces 72% less waste. These details underline that, their other attributes notwithstanding, SMRs do produce spent fuel for disposal, although less frequently in some concepts, especially breeders. This is the other reason for the “almost” qualifier.

As in all breeders, no matter what the starting fuel is, additional fuel could in principle be depleted uranium. This is the uranium left over after removal of most of the U-235, and it is very weakly radioactive. Nearly a ton of it was used in each of the old Boeing 747s for counterweights in the back-up stabilization systems. It was also used (probably still is) in armor-piercing anti-tank rounds, because the pyrophoricity of uranium causes a friction-induced fire inside the tank cabin after penetration. Apologies for the ghastly imagery, but war is hell.

Advanced SMRs could play an important role in decarbonization of the grid. My personal favorites are those that use thorium as fuel, such as the ThorCon design, which the company is launching in Indonesia. Thorium is safe to transport and relatively abundant in countries such as India, and its spent fuel contains much less plutonium, thus reducing the risk of nuclear weapon proliferation.

As in most targets of value, we must follow the principle of “all of the above”*.

Vikram Rao, December 26, 2024

*All together now, from All Together Now, by The Beatles (1969), written by Lennon-McCartney

Drill Baby Drill, Drill Hot Rocks

December 5, 2024 § Leave a comment

“Drill baby drill” is being bandied around, especially post-election, reflecting the views of the president-elect. Thing is, though, baby’s already been drilling up a storm. World oil consumption was at an all-time high in 2023, breaking the 100 million barrel per day (MMbpd) barrier. And the International Energy Agency (IEA) projects further demand growth, to about 106 MMbpd by 2028. The IEA also projects the US as the largest contributor to the supply, provided the sanctions on Russia and Iran continue.

Courtesy of the International Energy Agency

To execute the stated intent to stimulate US production, all that the new White House needs to do is not mess with the sanctions. For ideological reasons they may be tempted to open the Arctic National Wildlife Refuge to leases. But none of the majors will come, and not even the larger independents. There are easier pickings in shale oil and in wondrous new opportunities such as Guyana. Is it still a party if nobody comes?

Note in the figure above that the projection by the IEA has roughly the same slope as the pre-pandemic period, with a bit of a dip in the out years ascribed to electric vehicles. And if that were not enough, world coal consumption hit a historic annual high of 8.7 billion tonnes in 2023, even as Britain, where coal powered the Industrial Revolution, closed its last coal-fired power plant this year. The largest increases were in Indonesia, India and China, in that order. Let me underline: both oil and coal hit all-time highs in usage last year. So much for the great energy transition.

So, what gives? China and India, the two countries with the greatest uptick in coal usage, need energy for economic uplift, and for now that means coal, since they are net importers of oil and gas. Consider, though, that the same countries are numbers 1 and 3 in rate of adoption of solar energy. What this means is that solar and wind cannot scale fast enough to keep up with demand. Making matters worse is the ever-increasing demand created by data centers.

One reason for not keeping up with demand is the land mass required. Numbers vary by conditions, especially for wind, but solar energy needs about 5 acres per MW, while wind on flat land typically needs about 30 acres per MW. Compare that to a coal generating plant, which needs 0.7 acres per MW (without carbon capture). Wind also tends to be far from populated areas, so transmission lines are needed, and much wind energy is curtailed because those lines are not readily constructed. To add to the complication, both solar and wind plants have low capacity factors, under 40%. So, nameplate capacity is not achieved continuously, and augmentation is needed with batteries or other storage means. Finally, governments would like the communities with retired coal plants to benefit from the replacements. This is hard at many levels, not the least being the availability of land, because the area required is many times that occupied by the coal plant being replaced. All this holds back scale.
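The land-use rules of thumb above are per MW of nameplate capacity, but low capacity factors mean each nameplate MW delivers much less on average. The sketch below divides one by the other. The capacity factors are illustrative assumptions (the text only says solar and wind are "under 40%"), and the coal value is a hypothetical firm-plant figure.

```python
# Land per MW of nameplate capacity (rules of thumb quoted in the text), and
# per MW of *average* output once capacity factor is taken into account.
# ASSUMPTION: the capacity factors are illustrative values, not from the text.

ACRES_PER_MW = {"solar": 5.0, "wind (flat land)": 30.0, "coal": 0.7}
ASSUMED_CAPACITY_FACTOR = {"solar": 0.25, "wind (flat land)": 0.35, "coal": 0.80}


def acres_per_average_mw(source: str) -> float:
    """Acres needed per MW of output averaged over a year (nameplate acres / CF)."""
    return ACRES_PER_MW[source] / ASSUMED_CAPACITY_FACTOR[source]


for source, acres in ACRES_PER_MW.items():
    print(f"{source:>16}: {acres:5.1f} acres/MW nameplate, "
          f"{acres_per_average_mw(source):6.2f} acres/MW average output")
```

On these assumed capacity factors, solar needs roughly 20 acres and flat-land wind roughly 86 acres per MW of average output, against under 1 acre for coal, so the gap is wider than the nameplate figures suggest.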

Geothermal Energy. Two types of firm (high capacity factor) carbon-free energy that fit the bill in terms of land mass are geothermal energy and small modular reactors. Here we will discuss just the former, which involves drilling wells into hot rock, pumping water in and recovering the hot fluid to drive turbines. Fervo Energy, in my opinion the leading enhanced geothermal systems (EGS) company (disclosure: I advise Fervo, and anything disclosed here is public information or my conjecture), has been approved for a 2 GW plant in Utah, which has a surface footprint of 633 acres. This calculates to about 0.3 acres per MW. The footprint of Sage Geosystems is similar. Sage also has an innovative variant that takes advantage of the poroelasticity of rock, and which could provide load-following backup storage for intermittency in solar and wind, thus enabling scale in a different way.
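The footprint figure can be checked directly and set against the nameplate land-use numbers from the previous paragraph. This sketch uses only numbers quoted in the text and compares surface area only; as noted below, the underground extent of the wells is much larger.

```python
# Footprint check for the Fervo Utah project, and how it compares with the
# nameplate land-use rules of thumb quoted for solar, wind and coal.
# Surface area only; the underground extent of the wells is not counted.

FERVO_SURFACE_ACRES = 633.0
FERVO_CAPACITY_MW = 2000.0  # 2 GW

OTHER_ACRES_PER_MW = {"solar": 5.0, "wind (flat land)": 30.0, "coal": 0.7}

fervo_acres_per_mw = FERVO_SURFACE_ACRES / FERVO_CAPACITY_MW


def land_multiple(acres_per_mw: float) -> float:
    """How many times more surface land per MW a source needs than the Fervo plant."""
    return acres_per_mw / fervo_acres_per_mw


print(f"Fervo: {fervo_acres_per_mw:.2f} acres per MW")
for source, acres in OTHER_ACRES_PER_MW.items():
    print(f"{source:>16}: {land_multiple(acres):5.1f}x the Fervo footprint per MW")
```

This is a nameplate-to-nameplate comparison. Because geothermal is firm, a comparison per MW of average output would widen the gap against solar and wind further.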

Aside from the favorable footprint of Fervo emplacements (incidentally, the underground footprint is significant because each of the over 300 wells is about a mile long), the technology is highly scalable for the following reasons. All unit operations are performed by oilfield personnel, who need no additional training and are therefore readily available. Certainly, the technology is underpinned by unique modeling (developed in large part in the Stanford PhD thesis of a founder), but the key is that when oil and gas production eventually diminishes, the same personnel can be used here. In fact, an oil and gas company could hold geothermal assets alongside its oil and gas ones, and simply mix and match personnel as demand dictates.

The shale oil and gas industry found that when multiple wells were operated on “pads”, cost per well came down significantly. Those learnings would apply directly to EGS. Accordingly, I would expect EGS systems at scale to deliver carbon free power, 24/7/365, at very favorable costs.

Governments and investors ought to take note that EGS variants are possibly the fastest means for economically displacing coal, and eventually oil. In the case of the latter, even that displacement does not eliminate jobs.

As the title revealed, the refrain now changes a bit to: Drill baby drill, drill hot rocks*.

Vikram Rao

* Lookin’ for some hot stuff, baby, in Hot Stuff by Donna Summer, 1979, Casablanca Records

AI Will Delay the Greening of Industry

October 31, 2024 § Leave a comment

Artificial Intelligence (AI), and its most recent avatar, generative AI, holds promise for industrial efficiency. Few will doubt that premise. How much, how soon, may well be debated. But not whether. In the midst of the euphoria, especially the exploding market cap of Nvidia, the computational lynchpin, lurks an uncomfortable truth. Well, maybe not truth, but certainly a firmly supportable view: this development will delay the decarbonization of industries, especially clean energy alternatives such as hydrogen, and the so-called hard to abate commodities, steel and cement.

The basic argument is simple. Generative AI (Gen AI) is a power hog. The same query uses nearly 10 times as much energy with Gen AI as with a conventional search. This presumption rests on an estimate made for an early ChatGPT model, wherein the energy used was 2.9 watt hours for the same query that used 0.3 watt hours on a Google search. The usage gets worse when images and video are involved. However, these numbers will improve for both categories. Evidence for that is that just over a decade ago, data centers were the concern in energy usage. Dire predictions were made regarding swamping of the electricity grid. The energy consumption of data centers was 194 terawatt hours (TWh) in 2010. In 2018 it was 205 TWh, a mere 6% increase, despite compute instances increasing by 550% (Masanet et al., 2020)1. The improvement was both in computing efficiency and power management.
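The figures above can be worked through to show both the per-query gap and the implied efficiency gain in data centers. This sketch uses only the values quoted in the paragraph, and treats "compute instances" as a proxy for compute delivered, which is a simplification.

```python
# Arithmetic behind the energy figures quoted in the text.
# Simplification: compute instances are treated as a proxy for compute delivered.

GENAI_WH_PER_QUERY = 2.9    # early ChatGPT estimate
SEARCH_WH_PER_QUERY = 0.3   # conventional Google search

DC_TWH_2010 = 194.0
DC_TWH_2018 = 205.0
COMPUTE_INCREASE = 5.50     # "increasing by 550%", i.e. 6.5 times the 2010 level

query_ratio = GENAI_WH_PER_QUERY / SEARCH_WH_PER_QUERY
energy_growth = DC_TWH_2018 / DC_TWH_2010 - 1.0
# Energy per unit of compute in 2018 relative to 2010 (1.0 would mean no change).
energy_per_compute = (DC_TWH_2018 / DC_TWH_2010) / (1.0 + COMPUTE_INCREASE)

print(f"Gen AI vs search energy per query: {query_ratio:.1f}x")
print(f"Data center energy growth 2010-2018: {energy_growth:.1%}")
print(f"Energy per unit of compute, 2018 vs 2010: {energy_per_compute:.2f} "
      f"(a {1 - energy_per_compute:.0%} reduction)")
```

On this reading, energy per unit of compute fell by roughly 84% over those eight years, which is the kind of improvement the argument expects to recur for Gen AI.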

More of that will certainly occur. Nvidia, the foremost chipmaker for these applications, claims dramatic reductions in energy use in forthcoming products. The US Department of Energy is encouraging the use of low-grade heat recovered from cooling the data centers. A point of clarification on terminology: the cloud has similar functionality to a data center. The difference is that data centers are often physically linked to an enterprise, whereas the cloud is in a remote location serving all comers. We use the terms interchangeably here. Low-grade heat is loosely defined as heat at temperatures too low for conventional power generation. However, such heat may be suitable for processes such as desalination by membrane distillation and the regeneration of solvents used in direct air capture of carbon dioxide.

Impact on De-carbonizing Industry

The obvious positive impact will be on balancing the grid. The principal carbon-free sources of electricity are solar and wind. Each of these is highly variable in output, with capacity factors (the energy actually generated as a fraction of what the plant would produce running at full nameplate capacity) of less than 30% and 40%, respectively. The gaps need filling, and each gap filler has its vagaries. AI will undoubtedly be highly influential in optimizing the usage from all sources.
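Capacity factor is a simple ratio, and a worked example makes the "gap" concrete. The 100 MW solar farm and its annual output below are hypothetical numbers chosen for illustration, not data from the text.

```python
# Capacity factor: energy actually generated over a period, divided by what the
# plant would have generated running at full nameplate output for that period.

HOURS_PER_YEAR = 8760


def capacity_factor(energy_mwh: float, nameplate_mw: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Fraction of nameplate energy actually delivered over `hours`."""
    if nameplate_mw <= 0 or hours <= 0:
        raise ValueError("nameplate_mw and hours must be positive")
    return energy_mwh / (nameplate_mw * hours)


# Hypothetical 100 MW solar farm that delivers 219,000 MWh in a year.
cf = capacity_factor(219_000, 100)
average_output_mw = cf * 100

print(f"Capacity factor: {cf:.0%}")
print(f"Average output: {average_output_mw:.0f} MW of 100 MW nameplate")
```

A 25% capacity factor means the farm averages only a quarter of its rating; the other three quarters of the time-averaged demand it was sized for must come from gap fillers.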

The obverse side of that coin is the increasing demand for electricity by the data centers supporting AI of all flavors. The Virginia-based consulting company ICF predicts usage increasing by 9% annually from the present to 2028. Many data center owners have announced plans for all energy used to be carbon-free by 2030. Carbon-free electricity capacity additions are primarily in solar and wind, and each of these requires temporal gap filling. Longer-duration gaps (over 10 hours) are predominantly filled by natural gas generators. A major effort is needed to enable the scaling of carbon-free gap fillers, the most viable of which are innovative storage systems (including hydrogen), advanced geothermal systems and small modular reactors (SMRs).
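Compounding at 9% a year adds up quickly. This sketch assumes "from the present to 2028" means 2024 to 2028 (four years) and indexes 2024 usage to 100, since the text gives no absolute baseline.

```python
import math

# Compound growth at the ICF-projected 9% per year.
# ASSUMPTION: "from the present to 2028" is read as 2024 to 2028 (4 years),
# and 2024 usage is indexed to 100 because the text gives no absolute baseline.

ANNUAL_GROWTH = 0.09
BASE_YEAR, END_YEAR = 2024, 2028
BASE_INDEX = 100.0


def compound(value: float, rate: float, years: int) -> float:
    """Value after `years` of growth at a constant annual `rate`."""
    return value * (1.0 + rate) ** years


index_2028 = compound(BASE_INDEX, ANNUAL_GROWTH, END_YEAR - BASE_YEAR)
doubling_years = math.log(2.0) / math.log(1.0 + ANNUAL_GROWTH)

print(f"Usage index in {END_YEAR}: {index_2028:.1f} (2024 = 100)")
print(f"Doubling time at 9%/yr: {doubling_years:.1f} years")
```

Four years at 9% is about a 41% increase, and demand growing at that rate would double in roughly eight years.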

The big players in cloud computing have recognized this. Google is enabling scaling by purchasing power from the leading geothermal player, Fervo Energy, and is doing the same with Hermes SMRs made by Kairos Power. An interesting twist on the latter is that the Hermes SMR is an advanced reactor of the class known as pebble bed reactors, using molten salt cooling (as opposed to water in conventional commercial reactors). It uses a unique fuel contained in spheres known as pebbles. The reaction products are retained in the pebble by a hard coating. This is not the place to discuss the pros and cons of the TRISO fuel used, except to note that it uses high-assay low-enriched uranium (HALEU), much of which is currently imported from Russia. Google explicitly underlines that part of its motivation is to encourage the scaling of SMRs. This is exactly what is needed2, especially for SMRs, whose promise of lower cost electricity is largely premised upon economies of mass production replacing economies of scale of the plant.

Microsoft has taken the unusual (in my view) step of contracting to take the full production from a planned recommissioning of the only functional reactor at the Three Mile Island (conventional) nuclear reactor facility. In my view, conventional reactors are passé and the future is in SMRs. The most recent conventional reactors commissioned are two in Georgia. The original budget more than doubled, and the plants were delayed by 7 years. Par for the course for nuclear plants. Microsoft is certainly aware of the importance of SMRs, in part because founder Bill Gates is backing TerraPower, which uses an advanced breeder reactor design with liquid sodium as the coolant and molten salt for storage. The “breeder” feature3 involves creation of fissile Pu-239 when neutrons from an initial core of enriched U are captured by U-238 in the surrounding depleted U blanket. The reactions are self-sustaining, requiring no additional enriched U. The reactor can be designed to operate for over 50 years without intervention. Accordingly, it could be underground. The design has not yet been permitted by the NRC but holds exceptional promise because the fuel is essentially depleted uranium (uranium from which most of the fissile U-235 has been extracted) and the issue of disposal of spent fuel is avoided.

Impact on Green Hydrogen, Steel and Cement

Hydrogen as a storage medium, alternative fuel, and feedstock for green ammonia, has a lot of traction. One of the principal sources of green hydrogen is electrolysis of water. But it is green only if the electricity used is carbon-free.

Similarly, steel and cement are seeking to go green because collectively they represent about 18% of CO2 emissions. Cement is produced by calcining limestone, and each tonne of cement produced causes about a tonne of CO2 emissions. Nearly half of that is from the fuel used. Electrically heated kilns, using carbon-free electricity, are proposed. Similarly, each tonne of steel produced causes emission of nearly 2 tonnes of CO2. A leading means for reducing these emissions is the use of hydrogen, instead of coke, to reduce the iron oxide to iron. Again, the hydrogen would need to be green. Moreover, only high-grade iron ore is suitable, and it is in short supply worldwide. A recent innovation drawing considerable investor interest is electrolytic iron production, which can use low-grade ore. But for the steel to be carbon-free, the electricity used must be as well.
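To see why hydrogen-based steel competes for carbon-free electricity, a stoichiometric estimate helps. This sketch assumes the iron oxide is hematite (Fe2O3) reduced by Fe2O3 + 3 H2 -> 2 Fe + 3 H2O, and uses an illustrative electrolyzer consumption of 55 kWh per kg of hydrogen; that figure is an assumption, not from the text.

```python
# Stoichiometric hydrogen needed to reduce hematite to iron, and the electricity
# to make that hydrogen by electrolysis.
# Reaction: Fe2O3 + 3 H2 -> 2 Fe + 3 H2O  (1.5 mol H2 per mol Fe)
# ASSUMPTION: 55 kWh per kg H2 is an illustrative electrolyzer figure.

M_FE = 55.845      # g/mol, iron
M_H2 = 2.016       # g/mol, molecular hydrogen
H2_PER_FE = 3 / 2  # mol H2 per mol Fe, from the balanced reaction above
ASSUMED_KWH_PER_KG_H2 = 55.0


def h2_kg_per_tonne_iron() -> float:
    """Minimum (stoichiometric) kg of H2 to reduce enough Fe2O3 for 1 tonne of Fe."""
    mol_fe = 1_000_000 / M_FE          # grams in a tonne / molar mass
    return mol_fe * H2_PER_FE * M_H2 / 1000.0


h2_kg = h2_kg_per_tonne_iron()
electricity_mwh = h2_kg * ASSUMED_KWH_PER_KG_H2 / 1000.0

print(f"Hydrogen: about {h2_kg:.0f} kg per tonne of iron (stoichiometric minimum)")
print(f"Electrolysis electricity: about {electricity_mwh:.1f} MWh per tonne of iron")
```

This is a floor: real reduction furnaces run with excess hydrogen and also need heat, so actual electricity demand per tonne of iron would be higher than the roughly 3 MWh shown here.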

The world is increasingly electrifying. It runs the gamut from electrification of transportation to cryptocurrency to decarbonization of industrial processes. All these either require, or aspire to have, carbon-free sources of electricity. Now, AI and its Gen AI variant are adding a heavy and increasing demand. Many of these share a common trait: the need for electricity 24/7/365. In recognition of the temporal variability in solar and wind sources, the big players are opting for firm carbon-free sources such as geothermal and SMRs. That is the good news, because they will enable scaling of a nascent industry. The not so good news for all the rest is that these folks have deep pockets and are tying up supply with contracts. It remains to be seen how a startup in green ammonia or steel will compete for carbon-free electricity.

AI could well push innovation in industrial de-carbonization to non-electrolytic processes.

Vikram Rao

October 31, 2024

1 Masanet, E., et al. (2020). Recalibrating global data center energy-use estimates. Science, 367(6481), 984–986.

2,3 pages 53 and 12 https://www.rti.org/rti-press-publication/carbon-free-power

TRADER JOE BIDEN

May 26, 2024 § 2 Comments

President Joe Biden is in the oil trading game. To date he has bought low and sold high, an enviable record. He has used the Strategic Petroleum Reserve (SPR) as a tool for stabilizing oil prices continually, and not just in a supply crisis. His nuanced policy on minimizing Russian oil sale profits has not caused a supply-disruption-led oil price rise. In the last two years, transport fuel prices have been stable in the US, and domestic oil production has been high. Some folks think he is perpetuating fossil fuel in achieving the latter. Not so. Shale oil wells are notoriously short lived. Other folks think he is taking an inspired gamble with our energy security. He is not. Abundant, accessible shale oil is our security. And conventional oil traders must be haters. Riding volatility waves is their skill.

When President Biden authorized withdrawal of 180 million barrels (MM bbls) from the SPR in 2022, there were howls of anguish from many sides: the SPR was a reserve for emergencies, not the sitting president’s piggy bank; he was placing the country at risk; and so on. At the time I wrote a blog supporting the drawdown, which entailed withdrawal of 1 MM bbl per day for 180 days. My support was premised on the argument that the SPR was no longer necessary at the design level of 714 MM bbls. At the time it was conceived in 1973 (and executed in 1975), we were importing 6.2 MM bbl per day. In 2022 we were a net exporter by a small margin. But the story is better than that. We import heavily discounted heavy oil and export full-price light crude. Again, buying low, selling high.

The chart shows the SPR levels over the years. Note the plummet in 2022. In February 2024 it was at 361 MM bbls. This is ample in part because much of domestic production is shale oil, and new wells can be brought on stream within a few weeks. Shale oil is, in effect, our strategic reserve. One argument against that assertion is that many of the operators are small independent producers, who are averse to taking risk with future pricing, and may need inducements.
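The sizing argument in the two paragraphs above reduces to a few divisions. This sketch uses only figures quoted in the text; "days of cover" is an illustrative yardstick introduced here, measuring how long a full design-level SPR could have replaced imports at the rate prevailing when it was conceived.

```python
# Simple SPR arithmetic using figures quoted in the text.
# "Days of cover" is an illustrative yardstick, not a term from the text.

DESIGN_CAPACITY_MMBBL = 714.0
IMPORTS_AT_CONCEPTION_MMBPD = 6.2   # imports when the SPR was conceived
DRAWDOWN_RATE_MMBPD = 1.0           # 2022 release rate
DRAWDOWN_DAYS = 180
LEVEL_FEB_2024_MMBBL = 361.0

drawdown_mmbbl = DRAWDOWN_RATE_MMBPD * DRAWDOWN_DAYS
# Days of (then) imports a full design-level SPR could have replaced.
design_cover_days = DESIGN_CAPACITY_MMBBL / IMPORTS_AT_CONCEPTION_MMBPD
fraction_of_design = LEVEL_FEB_2024_MMBBL / DESIGN_CAPACITY_MMBBL

print(f"2022 release: {drawdown_mmbbl:.0f} MM bbl")
print(f"Full design SPR vs imports at conception: {design_cover_days:.0f} days of cover")
print(f"February 2024 level: {fraction_of_design:.0%} of design capacity")
```

The original design bought roughly 115 days of import cover. With the US a small net exporter by 2022, that yardstick no longer bites in the same way, which is the premise of the argument above.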

Biden’s Gambit

Enter President Biden into the quandary. He needs gasoline prices to remain affordable. But he also needs the shale oil drillers to keep at it for the nation to continue to enjoy North American self-sufficiency in oil (domestic production plus a friendly and inter-dependent partner, Canada). Gas is a horse of a different color. The US has gone from an importer of liquefied natural gas (LNG) to the largest exporter in the world in just 15 years. American LNG is key to reduced European reliance on Russian gas. How this is reconciled against renewable energy thrusts is a topic unto itself for another time.

He ordered the SPR release described above. The average price of the oil in the reserve was around USD 28 per bbl. He sold it at an average price of USD 95. Not all SPR oil is of the same quality, and depending on which tranches were sold, the selling price could have been less for any given lot. On average, not a shabby profit. Then in July last year, when the price was USD 67, he partially refilled the SPR (see the small blip upward on the chart). In so doing he fulfilled a commitment he made to drillers back in 2022 that he would buy back if the price dropped to the USD 67–72 range. Such purchases would, of course, tend to raise prices somewhat. The mere intent, taken together with the fact that the SPR had sufficient capacity to add 350 MM bbls, would give the market a measure of stability, a goal shared by OPEC, albeit at levels believed to be in the mid-80s.

The purchase in July 2023 was for about 35% of the amount he sold in 2022. The reported profit was USD 582 MM. According to Treasury, the 2022 sale caused a drop in gasoline price of USD 0.40 per gallon. In an election year. And the mid-term election went more blue than expected. Political motivations aside, the tactical use of the SPR to stabilize gasoline prices and at the same time keep domestic industry vibrant, is a valid weapon in any President’s arsenal. As noted earlier, an SPR at a third of the originally intended levels is now adequate as a strategic reserve. Any fill above that level could be discretionary.
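A rough book-value view of the trade, using only the average prices and volumes quoted above. This is an illustrative margin against the historical average cost of the barrels; the text does not say how the reported USD 582 MM figure was computed, and it is a different measure that should not be compared with this one directly.

```python
# Book-value arithmetic on the 2022 sale and 2023 buyback, from figures in the text.
# Illustrative only: a margin against historical average cost, which is a
# different measure from the reported USD 582 MM profit figure.

AVG_BOOK_COST_USD_PER_BBL = 28.0
AVG_SALE_PRICE_USD_PER_BBL = 95.0
VOLUME_SOLD_MMBBL = 180.0
BUYBACK_SHARE = 0.35                # July 2023 purchase as a share of the 2022 sale
BUYBACK_PRICE_USD_PER_BBL = 67.0

margin_per_bbl = AVG_SALE_PRICE_USD_PER_BBL - AVG_BOOK_COST_USD_PER_BBL
gross_margin_busd = margin_per_bbl * VOLUME_SOLD_MMBBL / 1000.0  # MM USD -> B USD
buyback_mmbbl = BUYBACK_SHARE * VOLUME_SOLD_MMBBL
sell_buy_spread = AVG_SALE_PRICE_USD_PER_BBL - BUYBACK_PRICE_USD_PER_BBL

print(f"Margin over book cost: USD {margin_per_bbl:.0f} per bbl, "
      f"about USD {gross_margin_busd:.1f} B on {VOLUME_SOLD_MMBBL:.0f} MM bbl")
print(f"Buyback volume: about {buyback_mmbbl:.0f} MM bbl, "
      f"bought USD {sell_buy_spread:.0f} per bbl below the average sale price")
```

Either way it is sliced, the barrels went out well above what they cost and came back in well below what they fetched.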

Biden’s gambit went a step further. Prices were declining in October 2023. Biden unveiled a standing offer to buy oil for the SPR at a price of USD 79 for up to 3 MM bbls a month, no matter the market price at the time. For the producer this was a hedge against lower prices. While in world consumption terms this was the proverbial drop in the bucket (uhh, barrel), the inducement worked. Investment is reported to have tripled in the period following the offer.
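To put "the proverbial drop in the bucket" in numbers: this sketch assumes world consumption of about 100 MM bbl per day (the 2023 level cited in "Drill Baby Drill, Drill Hot Rocks" above) and a 365-day year, and it assumes the offer is fully taken up every month.

```python
# How big is the standing offer next to world oil demand?
# ASSUMPTIONS: world consumption of about 100 MM bbl per day, a 365-day year,
# and full take-up of the 3 MM bbl per month offer.

OFFER_MMBBL_PER_MONTH = 3.0
OFFER_PRICE_USD_PER_BBL = 79.0
WORLD_MMBPD = 100.0

annual_offer_mmbbl = OFFER_MMBBL_PER_MONTH * 12
annual_world_mmbbl = WORLD_MMBPD * 365
share_of_world = annual_offer_mmbbl / annual_world_mmbbl
annual_commitment_busd = annual_offer_mmbbl * OFFER_PRICE_USD_PER_BBL / 1000.0

print(f"Offer: {annual_offer_mmbbl:.0f} MM bbl per year")
print(f"Share of world consumption: {share_of_world:.2%}")
print(f"Annual commitment at full take-up: about USD {annual_commitment_busd:.2f} B")
```

About a tenth of a percent of world demand, yet a price floor of that size was apparently enough to change producer behavior.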

Russian Oil

The Russian invasion of Ukraine prompted actions intended to reduce Russian income while not causing a rise in the world oil price. A combination of sanctions and price caps has certainly achieved the second goal. Russia was forced to sell oil through secondary channels and India became a large buyer, initially at heavily discounted prices. India then refined the oil and sold into all markets, including US and allies. Blind eyes got turned. At first. Now there are additional sanctions. As I noted before, US policy was nuanced. But world prices remained stable and US production thrived.

Trader Joe Biden has shown how deft buying and selling oil can utilize the SPR to achieve national objectives while making a profit*. And in so doing, not relinquish the strategic objective of it as a reserve against extraordinary supply shocks. Future presidents will take note.

* You’ve got to know when to hold ‘em, know when to fold ‘em, in The Gambler, by Kenny Rogers (1978), written by Don Schlitz.

Vikram Rao

May 26, 2024

Wolfpack Déjà vu?

April 1, 2024 § Leave a comment

The last time the NC State men’s basketball team slipped into the NCAA tournament, they made the selectors look like clairvoyants. The selection was roundly criticized. Until the Pack cut down the nets on April 4, 1983. Are we set for a Wolfpack déjà vu?

This time there was no argument about being included. Winning the ACC tournament gave them the slot by rule. A strong belief was that but for this, the team would not have been selected. This belief was bolstered by the paltry 11th seeding given to the team. Even that other time, they received a 6th seeding. Lowly, but not bottom of the drawer.

The ACC certainly was underappreciated this time around. A perennial powerhouse, it got only 4 selections plus the mandatory one for NC State. Yet 4 reached the Sweet 16. So, the ACC Tournament victor could have been presumed to have some chops. Indeed, that has proven to be the case: the Pack reached the Final Four even from a lowly 11 seed. They beat Duke to get there. A different pairing, and who knows, the ACC might have had two in the Final Four, as was the case two years ago.

In that 1983 tournament, Houston and Louisville were considered to be the class of the affair. The luck of the draw had them meet in the semifinal, which was widely considered to be the “true final”. The matchup exceeded all expectations for entertainment. Both teams had high-flying forwards, and much of the match seemingly was played above the rim. Houston won that game, led by Clyde Drexler and Akeem (later named Hakeem) Olajuwon. Clyde had been a lightly recruited local Houstonian, and Akeem was relatively new to the sport from Nigeria. The title was seen as a mere formality against the surprising NC State Wolfpack.

Jim Valvano, the coach of the Wolfpack, had one key thing going for him. He could slow down the high flying by holding onto the ball. These were the days before the shot clock. Almost as boring as baseball. Or cricket before they wised up and invented the limited overs format, resulting in the sport catapulting into the number two spot in revenue behind the NFL. Baseball is still fiddling with little tweaks.

Back to the championship game. These are my personal memories from watching the game and may be wanting in some respects. Houston had scored 94 points in the semifinal game. The moribund pace of this one had thrown them off their game. The score was tied at 52 with a few seconds left. The Pack had the ball, and the ball handler was guarded by Reid Gettys of Houston. A tall guard, he was making the shot difficult. But, even with a foul to give, he did not foul intentionally prior to the shot. The player got off a desperation shot. Under the basket, in perfect rebounding position, was Olajuwon. The Pack’s Lorenzo Charles was forced to occupy a less choice spot. The shot missed everything and fell into the hands of Lorenzo Charles. He gratefully dunked the ball, and that was all she wrote. This was also the last time in the NCAA tournament that the winning team did not produce the MVP. That award went to Olajuwon.

I was rooting for my hometown team, Houston. So, dredging up these memories is not bereft of angst. But today, as a resident of the Triangle, I am rooting for the Pack. And that earlier game serves as a reminder that conventional wisdom does not always prevail. And who knows, maybe lightning will strike again*.

Vikram Rao

*Lightning is striking again, in Lightnin’ Strikes by Lou Christie (1966), written by Lou Christie and Twyla Herbert.